News

28 January 2025

DeepSeek & the Recent Market Dynamics

The success of DeepSeek's reasoning language model, R1, is making the impact of recent open-source development more obvious to the market.

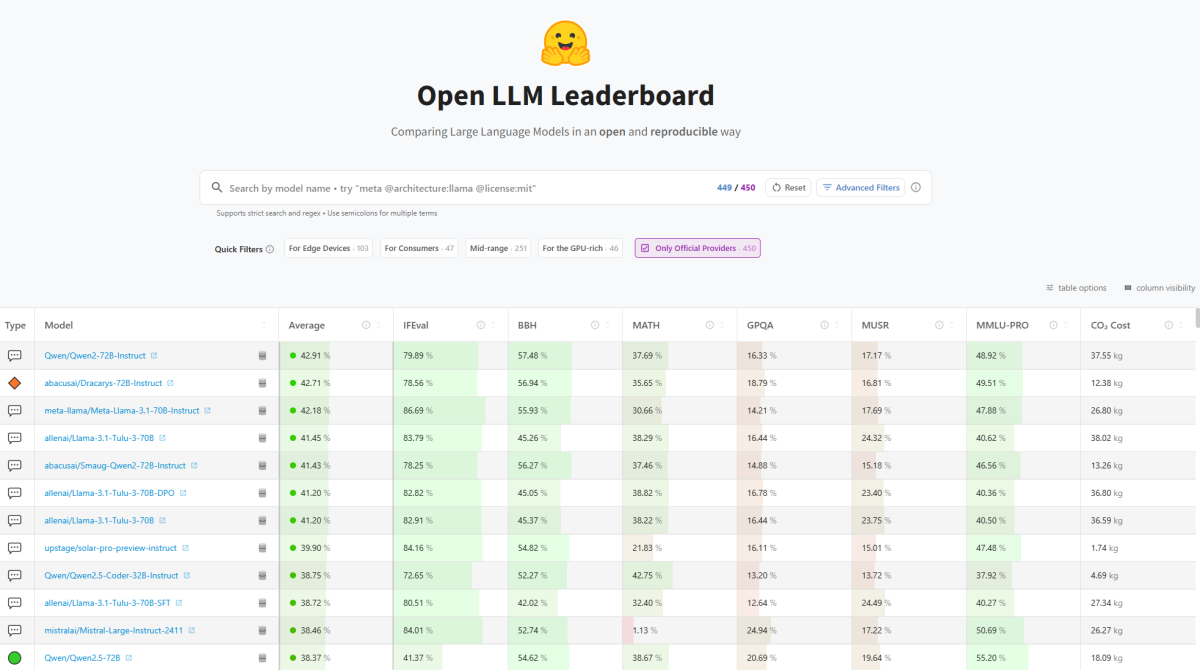

1) Smaller models have for years closely trailed state-of-the-art models while using a fraction of the parameters and a fraction of the compute. The age of exponential growth in model size seems to be over, as better techniques than brute-force scaling are found to learn more efficiently. In recent years (cf. below), the open-source community has focused on building models that come close in accuracy to their much larger counterparts while being 100 times smaller and more economical to train and use. The biggest players in these open-source lightweight Large Language Models have been Meta (Llama), Google (Gemma), Alibaba (Qwen), Mistral (Mixtral) and now DeepSeek.

2) China vs. US: Chinese labs have been at the forefront of AI alongside US labs for years. Chinese Large Language Models (LLMs) and models derived from them have topped open-source leaderboards for several quarters. This is not new with DeepSeek.

3) The most recent model developed by DeepSeek, R1, is particularly impactful because its reasoning capabilities match, and sometimes exceed, those of OpenAI's o1 model.

The training process behind DeepSeek-R1, which is making the headlines today:

A first base model, DeepSeek-V3-Base, was built using a Mixture-of-Experts approach, which has the advantage of being very efficient: only 37bn of its 671bn parameters are activated for each token.
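To illustrate why activating only a subset of parameters saves compute, here is a minimal sketch of top-k expert routing. It is a toy under stated assumptions, not DeepSeek's actual router: the eight experts, the router scores and the names `route_token` and `moe_layer` are hypothetical, and only the 37bn and 671bn figures come from the paragraph above.

```python
import math


def softmax(scores):
    """Turn raw router scores into probabilities."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_layer(token, experts, router_scores, k):
    """Weighted sum of the outputs of the k selected experts only.

    The other experts are never evaluated, which is where the saving comes from.
    """
    return sum(w * experts[i](token) for i, w in route_token(router_scores, k))


# Toy setup (hypothetical): 8 experts, each a simple scaling function.
experts = [lambda x, s=s: s * x for s in range(1, 9)]
router_scores = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]

selected = route_token(router_scores, k=2)
output = moe_layer(1.0, experts, router_scores, k=2)

# Share of parameters active per token, using the figures quoted in the text.
active_share = 37 / 671
print(f"experts used: {[i for i, _ in selected]}, active share: {active_share:.1%}")
```

With the quoted figures, roughly one parameter in eighteen is used for any given token, so the cost of a forward pass is closer to that of a 37bn-parameter dense model than a 671bn one.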

Pure Reinforcement Learning (learning from the quality/coherence of text outputs without a specific target/supervision) is then applied to this base model to make it capable of reasoning, yielding DeepSeek-R1-Zero. Innovations such as learning from a group of outputs rather than from individual outputs help make this RL very efficient in terms of training cost.
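The idea of learning from a group of outputs can be sketched as follows: several answers are sampled for the same prompt, and each one is scored relative to the group average rather than against a separately trained value model. This is a simplified illustration of a group-relative advantage, not DeepSeek's training code; the function name, the reward values and the use of the population standard deviation are assumptions.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Score each sampled output relative to the other outputs in its group.

    Outputs that beat the group average get a positive advantage,
    those below it a negative one.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical rewards for four answers sampled for the same prompt
# (1.0 = correct final answer, 0.0 = incorrect).
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
print(advantages)
```

Because the baseline is simply the group mean, no additional model has to be trained and held in memory to estimate it, which is one plausible source of the training-cost savings mentioned above.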

Successive stages of supervised fine-tuning and reinforcement learning then further improve and refine the model and its outputs.

The final model, DeepSeek-R1, beats OpenAI o1 at maths (AIME 2024 benchmark) and matches its results on a variety of tasks. Given the efficiency of the Mixture-of-Experts model, using it costs a fraction of the price of o1 (R1: $0.55 per million input tokens and $2.19 per million output tokens vs. o1: $15 per million input tokens and $60 per million output tokens).
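To put the quoted prices in perspective, the short calculation below compares the bill for the same workload on both models. The per-token prices are those quoted above; the workload of 10 million input tokens and 2 million output tokens is a hypothetical example.

```python
# Prices in USD per million tokens, as quoted in the text.
PRICES_PER_MILLION = {
    "R1": {"input": 0.55, "output": 2.19},
    "o1": {"input": 15.00, "output": 60.00},
}


def workload_cost(model, input_tokens, output_tokens):
    """Total cost in USD for a given number of input and output tokens."""
    price = PRICES_PER_MILLION[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


# Hypothetical workload: 10M input tokens, 2M output tokens.
r1_cost = workload_cost("R1", 10_000_000, 2_000_000)
o1_cost = workload_cost("o1", 10_000_000, 2_000_000)
ratio = o1_cost / r1_cost

print(f"R1: ${r1_cost:.2f}, o1: ${o1_cost:.2f}, ratio: {ratio:.1f}x")
```

On these list prices, o1 is about 27 times more expensive than R1 for both input and output tokens, so the ratio barely depends on the mix of the two.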

4) DeepSeek has also open-sourced smaller distilled models of 7bn to 70bn parameters (built on the open-source Llama and Qwen models), which beat all models of similar size across reasoning, maths and coding tasks. They can be run on smaller GPU infrastructures and offer greater integration potential.
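A rough way to see why these sizes fit on smaller GPU infrastructures is to estimate the memory needed just to hold the weights: number of parameters times bytes per parameter. The sketch below is a back-of-the-envelope estimate only; it ignores activations, the attention cache and framework overhead, and the 16-bit and 4-bit precisions are assumptions rather than figures from DeepSeek.

```python
def weight_memory_gb(params_billion, bits_per_param):
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    bytes_per_param = bits_per_param / 8
    return params_billion * bytes_per_param


for params_billion in (7, 70):
    fp16 = weight_memory_gb(params_billion, 16)
    int4 = weight_memory_gb(params_billion, 4)
    print(f"{params_billion}bn parameters: ~{fp16:.0f} GB at 16-bit, ~{int4:.1f} GB at 4-bit")
```

By the same estimate, the full 671bn-parameter model would need over a terabyte at 16-bit precision, which is why the distilled versions are far easier to deploy.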

5) OpenAI dominance: the fact that DeepSeek now provides the world with a much cheaper alternative to o1, and has also open-sourced very strong smaller models, is a clear threat to OpenAI, which is still burning billions a year in employee and model training costs.

6) Nvidia: the (not new) development of much smaller “lightweight” models that closely trail the performance of models orders of magnitude larger is welcome news for users and for the environment, but bad news for Nvidia and infrastructure providers. The trend towards more efficient models originated in open-source efforts, and the expected specialization of models (Mixture of Experts could be considered part of that trend) should lead to a much more efficient use of LLMs in each domain of expertise. The continuous improvement of training techniques to minimize training costs (after years of brute-force stacking of neural network layers) should also contribute to a slowdown in the growth of AI-related Capex.

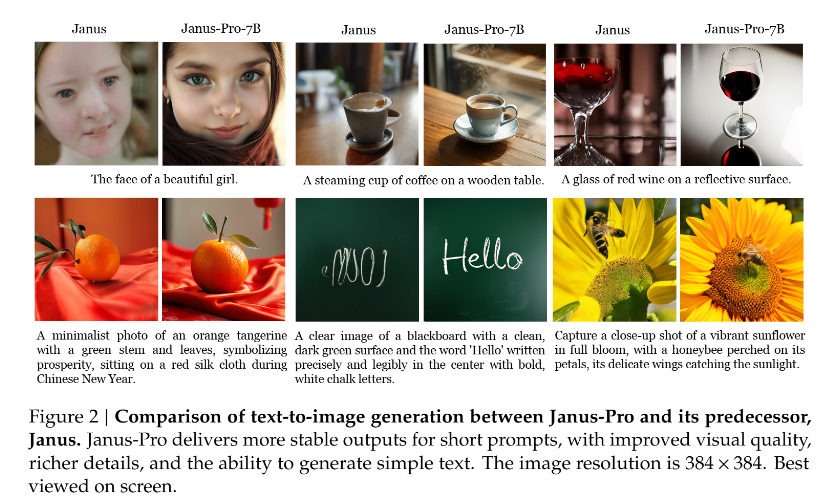

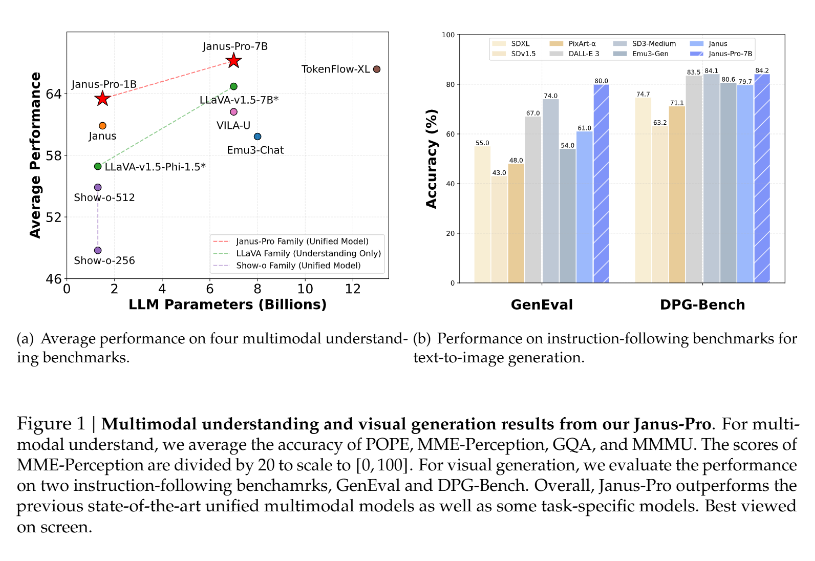

7) In a remarkable twist, DeepSeek announced Janus-Pro-7B on Monday, January 27th: an advanced version of their earlier Janus model, which beats OpenAI's DALL-E 3 at text-to-image generation, a new attack on OpenAI's current dominance. The model is open-source and available on DeepSeek’s GitHub page.

Disclaimer

Important Information:

The figures, comments, opinions and/or analyses contained herein reflect the sentiment of RAM with respect to market trends based on its expertise, economic analyses and the information in its possession at the date on which this document was drawn up and may change at any time without notice. They may no longer be accurate or relevant at the time of reading, owing notably to the publication date of the document or to changes on the market.

This document is intended solely to provide general and introductory information to the readers and notably should not be used as a basis for any decision to buy, sell or hold an investment. Under no circumstances may RAM be held liable for any decision to invest, divest or hold an investment taken on the basis of these comments and analyses.

RAM therefore recommends that investors obtain the various regulatory descriptions of each financial product before investing, to analyse the risks involved and form their own opinion independently of RAM. Investors are advised to seek independent advice from specialist advisors before concluding any transactions based on the information contained in this document, notably in order to ensure the suitability of the investment with their financial and tax situation.

Past performance and volatility are not a reliable indicator of future performance and volatility and may vary over time and may be independently affected by exchange rate fluctuations.

This document has been drawn up for information purposes only. It is neither an offer nor an invitation to buy or sell the investment products mentioned herein and may not be interpreted as an investment advisory service. It is not intended to be distributed, published or used in a jurisdiction where such distribution, publication or use is forbidden, and is not intended for any person or entity to whom or to which it would be illegal to address such a document. In particular, the investment products are not offered for sale in the United States or its territories and possessions, nor to any US person (citizens or residents of the United States of America). The opinions expressed herein do not take into account each customer’s individual situation, objectives or needs. Customers should form their own opinion about any security or financial instrument mentioned in this document. Prior to any transaction, customers should check whether it is suited to their personal situation, and analyse the specific risks incurred, especially financial, legal and tax risks, and consult professional advisers if necessary.

The information and analyses contained in this document are based on sources deemed to be reliable. However, RAM Active Investments S.A. cannot guarantee that said information and analyses are up-to-date, accurate or exhaustive, and accepts no liability for any loss or damage that may result from their use. All information and assessments are subject to change without notice. Subscriptions will be accepted only if they are made on the basis of the most recent prospectus and the latest annual or half-year reports for the financial product. The value of units and income thereon may rise or fall and is in no way guaranteed. The price of the financial products mentioned in this document may fluctuate and drop both suddenly and sharply, and it is even possible that all money invested may be lost. If requested, RAM Active Investments S.A. will provide customers with more detailed information on the risks attached to specific investments. Exchange rate variations may also cause the value of an investment to rise or fall. Whether real or simulated, past performance is not a reliable guide to future results. The prospectus, KIID, constitutive documents and financial reports are available free of charge from the SICAV’s head office, its representative and distributor in Switzerland, RAM Active Investments SA, and the distributor in Luxembourg, RAM Active Investments SA. This document is confidential and addressed solely to its intended recipient; its reproduction and distribution are prohibited. Issued by RAM Active Investments S.A. which is authorised and regulated in Switzerland by the Swiss Financial Market Supervisory Authority (FINMA).

No part of this document may be copied, stored electronically or transferred in any way, whether manually or electronically, without the prior agreement of RAM Active Investments S.A.